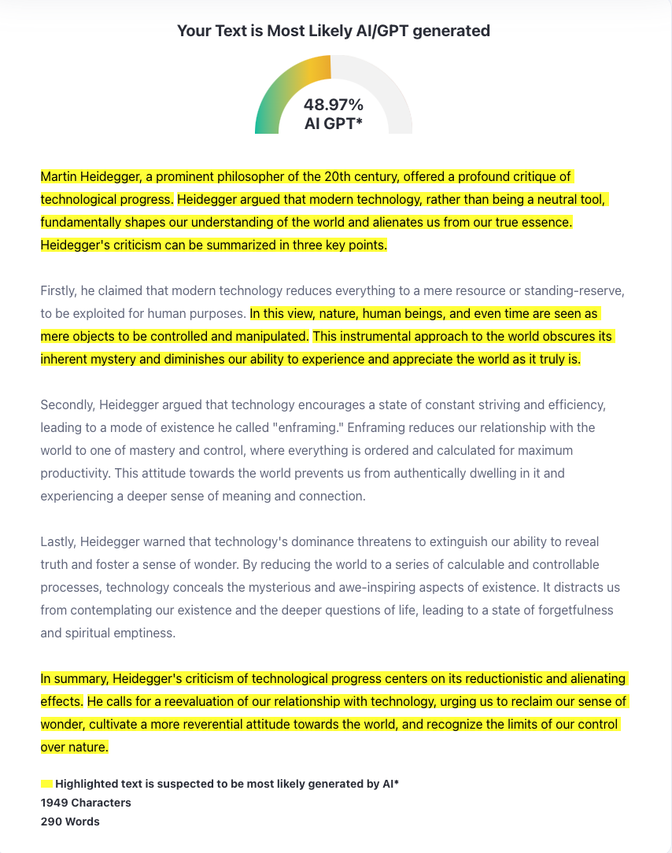

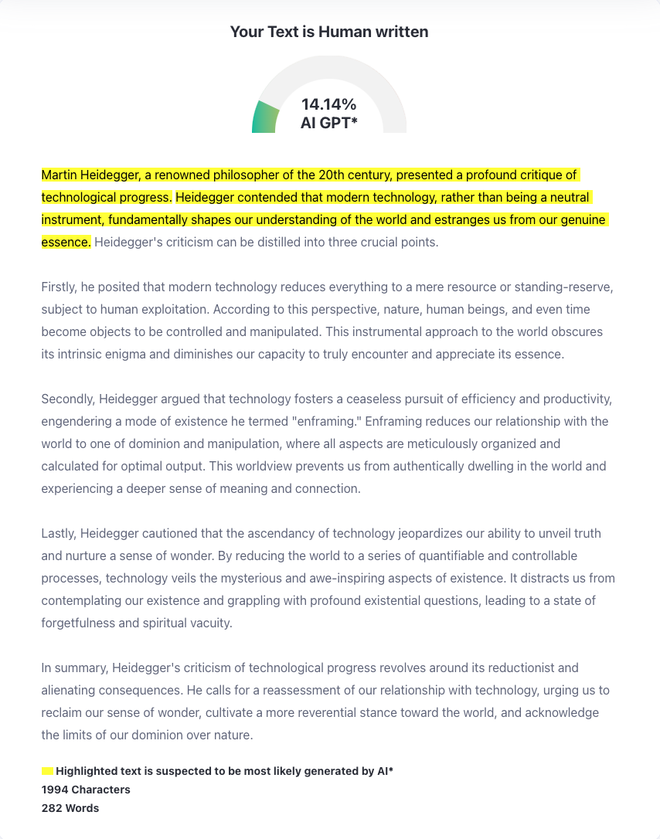

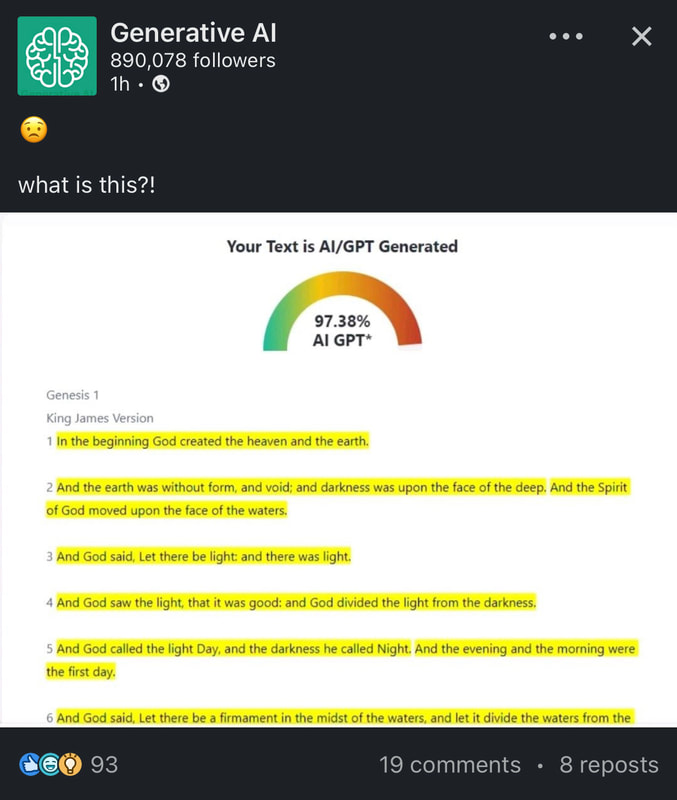

As ChatGPT started to go viral, an obvious concern among teachers was the potentially destructive impact of this technology on education, since it made “cheating” more tempting than ever before. With their generative AI capacities, ChatGPT and similar bots seemed to have created a new form of plagiarism so easy, effective, and “safe” (undetectable) as to make many forms of assessment obsolete. To the relief of many, AI-detectors were made available almost immediately. Yet, as a simple experiment demonstrates, these tools might soon be doomed, if they are not already useless. Is "fighting AI with AI" a pointless pursuit?

For the purpose of this experiment, I asked ChatGPT to "summarize Heidegger's critique of technological progress" (I couldn't help myself). As we would hope and expect, the AI-detector ZeroGPT indicated that the result was "most likely AI generated", with a score of 49%. However, a one-sentence prompt was enough to bring the score down by over 33 percentage points and to lead the AI-detector to conclude that the text was "human written". If necessary, repeating the process with the same prompt could even bring a 100% AI-generated text down to a score of 0%.

To be fair, these modified texts would sometimes alert a human reader. For some reason, trying to get chatbots to sound more human can make them sound like a student who is trying too hard to sound “smart” and ends up using words that are a little too big, a little out of place, and that they do not really understand. But it would be quite easy for a student to tone the prose down and remove the most awkward and "telling" choices. The prompt I used was also quite simplistic. I am not sharing it here, because AI is already disruptive enough for teachers, but I would not be surprised if similar, and more effective, anti-AI-detection prompts were already available to students.

Essentially, AI-detectors look at how predictable and consistent a piece of text is.
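To make "predictable and consistent" a little more concrete, here is a toy sketch in Python. It is not how ZeroGPT or any other real detector is implemented: the function names, the tiny word-frequency model, and the sample sentences are all assumptions made up for illustration. A real detector would presumably rely on a large language model's own probabilities rather than simple word counts. The sketch only shows the two intuitions: how surprising each word is, and how much sentence lengths vary.

```python
import math
import re
from collections import Counter


def sentence_length_variation(text: str) -> float:
    """Spread of sentence lengths (std dev / mean, in words).

    Values near 0 mean every sentence has about the same length,
    which is the "consistent" or "robotic" end of the scale.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean


def average_surprisal(text: str, reference: str) -> float:
    """Mean surprisal of `text`, in bits per word.

    Hypothetical stand-in for a language model: word frequencies are
    counted in `reference`, with add-one smoothing and a single bucket
    for unseen words. Lower values mean more predictable text.
    """
    ref_words = re.findall(r"[a-z']+", reference.lower())
    counts = Counter(ref_words)
    vocab_size = len(counts) + 1  # +1 for the "unseen word" bucket
    total = len(ref_words)
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    bits = [-math.log2((counts[w] + 1) / (total + vocab_size)) for w in words]
    return sum(bits) / len(bits)


# Made-up sample texts, for illustration only.
uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = "Stop. The cat, tired of waiting for dinner, finally sat down on the mat."

print("length variation (uniform):", sentence_length_variation(uniform))
print("length variation (varied): ", sentence_length_variation(varied))
print("surprisal of expected words:", average_surprisal("the cat", "the cat sat on the mat"))
print("surprisal of unusual words: ", average_surprisal("quantum zebra", "the cat sat on the mat"))
```

In this toy version, text made of expected words in evenly sized sentences scores low on both measures, and text with unusual words and uneven sentences scores higher. Asking a chatbot to rewrite its output with more varied vocabulary and sentence structure pushes both numbers up.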
What bots such as ChatGPT do is predict one word after another, based on probabilities learned from large amounts of text. If a text strings together exactly the kind of words that AI would expect, then it is likely AI-generated, and even more so if the length and structure of the sentences are a little “robotic”. To avoid detection, one can thus ask ChatGPT to rewrite a piece of text it just generated and introduce greater variety and less predictability in words and sentence structures. Tweaking the prompt can even help avoid the “overly verbose student” syndrome mentioned above. Using this prompt is very much akin to raising the “temperature”, an option in the non-chat version of GPT that increases the randomness of the bot's word choices, so that it picks less probable words more often.

All the prompt does, really, is reverse-engineer a way to make AI-generated text undetectable by looking at how AI-detectors work, which is ironic, given that these AI-detectors were reverse-engineered based on generative AI’s algorithms in the first place. This irony points to the first reason why AI-based AI-detection might be a pointless pursuit: not only will it always be possible to bypass it using AI, but generative AI will always be a step ahead, simply because detection and generation both rely on the same underlying technology.

Even without the kind of prompts I mentioned, students can already instruct AI to match their unique writing style. More generally, the more generative AI progresses, the better it will be at mimicking natural, human language, and the less detectable it will become. At present, OpenAI, the company behind ChatGPT, reports that its AI-detector "correctly identifies 26% of AI-written text (true positives) as “likely AI-written,” while incorrectly labeling human-written text as AI-written 9% of the time (false positives)".

"The truth is, the more generative AI progresses... the less detectable it will become."

Second, and most importantly, even if it were possible to develop an AI-detection technology that users could not bypass with the help of AI, this would not actually solve our problem. Imagine a teacher confronting a student and showing them that their essay was found to be “entirely written by AI”, with a score of 100%. Now, imagine that the student stands their ground and remains adamant that they wrote the piece. This could be a false positive. As mentioned above, AI-detectors sometimes miscategorize human-written passages. Whether or not that is the case, the point is that there is no way to know or establish the truth either way, at least not with AI.

When a student plagiarizes, they use an existing text and pass it off as their own. It is thus possible to find and produce evidence of their cheating: the original text. When a student uses AI, however, they prompt the creation of an original text, which does not leave an identifiable trace we could point to and say “you plagiarized this”. Detectable or not, AI plagiarism is thus not demonstrable. It is much closer to situations where students use someone else’s services to do their work for them, except on a much larger and more easily disguised scale. All AI-detectors can do is say: this reads exactly like something AI would write. Yet, except for very specific assignments in very specific classes, personal “voice”, style, and not sounding like a chatbot are not part of the standards being assessed. So, without definitive proof, what is there to say?

The idea of working a "watermark" into AI-generated texts could seem to offer a solution. Yet, beyond the intrinsic limitations of watermarks (they are easy to defeat with simple reformulations, or even with paraphrasing tools), the keys needed to detect them must remain secret, and thus can only be used by specific companies to detect their own content.
AI-generated text could technically be saved and made available for plagiarism checks, but this usual technique faces insurmountable obstacles here: proprietary information and data privacy legislation.

"Detectable or not, AI plagiarism is not traceable, and thus not demonstrable."

AI-detection might be a problem that is impossible to solve. However, such dead ends are also fantastic opportunities because, by forcing us to take a step back and reflect, they help open new perspectives, and often surface and address more fundamental issues. In that light, here are a number of questions for further consideration:

Image created on Deepai with the prompt "2 dollar bill, square 320x320".
