In his Republic, Plato used the tale of the ring of Gyges to ask: would we do what is right if we had access to an artifact that made us invisible? Arguably, AI is such a magical device: it lets students generate answers to any question that are good enough to earn the grades they want, and that are undetectable. In this context, educators need to adapt and rethink their practice. How can we ensure assessments are useful and reliable when students can so easily offload their thinking onto machine-learning chatbots? Or, in other words, how can we actually teach and evaluate anything in the matrix?

Back to the Future: The Temptation of AI-Proof Assessments

Paradoxically, there is a very real risk that the generalized and undetectable use of AI by students prompts a return to old-fashioned assessment practices that target low-order thinking. While tech integration made it easier to move away from in-class tests, a misplaced reaction to the rise of artificial intelligence might cause a drastic and unfortunate rebound. Or even worse, it could incentivize the deployment of extensive AI-based student surveillance.

However, trying to develop “AI-proof assessments” would likely be a losing long-term strategy. First, playing catch-up with artificial intelligence seems an exhausting and desperate endeavor. Of course, short-term solutions exist based on current limitations of the technology. For instance, although AI can already perform text-to-diagram tasks, its use to that end remains cumbersome, its capabilities limited, and its products highly unreliable. Thus, students can still be asked to answer a question with a mind map, with a good probability that this assessment will evaluate their own thinking.

Yet, this approach is short-sighted. Not only because AI will continue to make progress, but also because such examples only meet two of the three criteria of what a good assessment will look like in the future. In a world where AI is pervasive, assessment will need to:

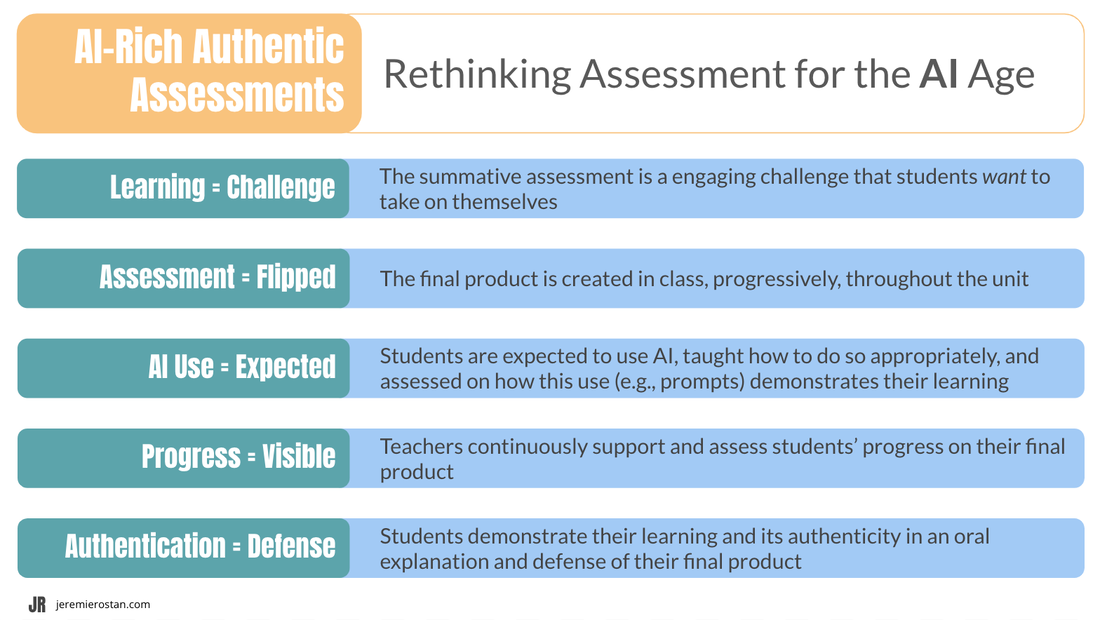

Long-Term Vision: Educating AI-Proof Students

Rather than AI-proof assessments, we should thus help teachers develop AI-proof students, i.e., students who are neither AI-dependent, nor AI-illiterate. To do so, we need to develop an approach that solves the following dilemma: Students will (and should) use AI to complete their school work. Yet, teachers must be able to ensure and evaluate that they learn in the process.

Fortunately, as is often the case, having two problems means that we can use each of them to solve the other one. To put it simply, one promising solution is to turn the assessment itself into an opportunity for students to demonstrate how they used AI to develop targeted understandings and skills.

The model below attempts to do so in a way that addresses the root causes of "cheating", such as low engagement or low perceived support and efficacy, which AI-plagiarism only helps reveal. It deviates in significant ways from traditional teaching and learning, and combines aspects of more modern approaches, to which it adds its own innovations, updating them for the AI age. In doing so, it helps solve many of the problems linked to AI-integration, all while reaping its benefits.

The summative assessment is an engaging challenge that students want to take on themselves.

Authentic and relevant assessments are not new, but they are absolutely key, because they help ensure cheating risk is low and learning chances are high.

The summative assessment is completed in class, progressively, throughout the unit.

Just like in Project-Based Learning, the summative assessment, or learning challenge, is “flipped”, broken down, and integrated with learning activities and formatives, which become steps, milestones, and successive versions of a final product. This helps ensure students demonstrate their learning, and its authenticity, as they develop it. It also helps support executive skills, such as planning, and again ensures cheating risk is low and learning chances are high.

Students are expected to use AI, taught how to do so appropriately, and assessed on how this use (e.g., prompts and process) demonstrates their learning.

The students’ progressive creation of their summative can of course include explicit instruction, modeling, as well as guided practice. In particular, students must learn how to use AI appropriately, for instance by using some of the AI-powered UDL strategies outlined here. They are required to explain how they did so, and are assessed on the understandings and skills demonstrated by their prompts, evaluation, and modifications of AI products.

Teachers continuously support and assess students’ progress on their final product.

Similar to a Studio Learning approach, teachers continuously assess the students’ summative in progress, as well as peripheral but crucial skills and dispositions (such as planning, collaboration, and resilience). These feedback sessions can take multiple forms: informal observations, formal 1:1 conferences, looking at intermediate products, peer feedback… Such interactions help teachers support and authenticate student learning, and can be opportunities for individual support and assessment, even in the case of group projects. Once again, flexible support, pacing, grouping, and other UDL elements reduce cheating risks and increase learning chances.

Students demonstrate their learning and its authenticity in an oral explanation and defense of their final product.

This approach not only avoids the "invisible middle", but also redefines what is meant by final submission. Students do not simply turn in a finished product, but present it, explaining their process (including AI use) and the thinking and learning behind it. This can range from a few questions checking its authenticity to grandiose showcases.

Properly facilitated, this constitutes a marked improvement over traditional summatives. Indeed, summatives are always proxies. What teachers should really assess is not the work, but the learning that it demonstrates. Yet, this demonstration is not always clear, and oral defenses can help clarify teachers’ understanding of students’ understanding. They are also fantastic opportunities for peers to learn from each other.

To reap these benefits, however, these should not be typical “class presentations”, where the presentation is the summative, but more similar to grand rounds, where experts present on and explain a learning experience. Interestingly, the latter can ensure that students learned and achieved the standards even if they used AI extensively to do so and to create their final product.

The model described above is, of course, an early blueprint for what AI-rich, authentic assessments might look like. The next step will be to design a more detailed prototype, likely on an extensive piece of writing, i.e., the kind of assessment where the risks and benefits of AI-integration seem to be both very high.
